Of all the historical topics to write about, WWII is the most daunting. There is so much interest, and people know it so well that it’s hard to say something new. Thanks to some recent research, I’m optimistic I have something to add. But I’d love to take advantage of my growing readership. Please leave comments on other things I should read or consider!

I’ve always been fascinated by the idea that the Second World War changed art forever. Though I’m not qualified to say, there seems to be something of a consensus that after WWII, the visual arts became more about art itself than their subject.1 Though it’s easy to talk about the change in styles after WWII - Abstract Expressionism, Pop Art, etc. - as any economist will tell you, pinning down the causal mechanisms is trickier. Why did art change? What about our tastes shifted after the War?

Whatever happened in art, technology shifted dramatically—tectonically. Unlike normal people, I find the First World War more interesting than the Second, precisely because it seems so old-fashioned. WWI actually had horses and used terriers to send messages in the trenches. Thirty years later, tanks and sonar had revolutionized warfare. WWI was a bizarre melange of old and new, with royalty leading men on horseback while tanks were being brought to the frontline. WWII, even eighty years later, still feels very modern with huge naval vessels, the importance of air superiority, and the heavy reliance on what we now broadly call ‘data science,’ but was then code cracking, logistics, and, frankly, women’s work.2 The technological jump between the two wars is almost unbelievable to this day.

But the technology of WWII isn’t just a subfield; it’s an entire world of work that’s captured the public’s attention since at least Pearl Harbor. So, my goal here isn’t to go through the key technologies of WWII but to discuss the enormous lasting effect the War had on our technological trajectory.

WWI: The Usual Story

To set the stage, I think it’s helpful to return to my pet interest in WWI and talk about the dog that didn’t bark. It would be wrong to say that technology played no role in WWI—technology has been critical for every battle since we left the caves, and perhaps before—but WWI is not typically remembered as a fight over technology. As Peter Jackson captures in the incredible documentary They Shall Not Grow Old, from the British perspective, English men went off to fight and returned to their farms much the way they’d left them. The soldiers themselves had been profoundly changed in a way they could not communicate. But the world around them seemed much the way they’d left it.

Just to illustrate exactly where technology was circa 1914, consider the reliance on animals. The British actually used dogs to carry messages in the trenches. Now, as a proud owner of a terrier, I would sooner expect the enemy to deliver me a message himself than my dog. The fact that dogs were even tried, let alone that they figured out a way to train the dogs to a level where they were worth the effort, provides a good sense of the state of the technology at the time. Similarly, horses played a significant role in delivering people and goods to the front. Sadly, a recurring theme in They Shall Not Grow Old is the stench of the rotting horse carcasses trapped in the mud around the trenches.

By WWII, the reliance on animals had sharply diminished, at least for the most mechanized armies. In its place were planes, ships, submarines, sonar, and all sorts of technologies now made famous in WWII movies. These efforts weren’t peripheral; they were the core of the war. The United States’ superior tank production was fundamental to its strength, and the cracking of the U-boat codes was essential to bringing the war to a close. Finally, the bombs dropped on Hiroshima and Nagasaki ended the technological story of WWII with perhaps the loudest bang the world has ever heard.

WWII: The Technological Origins Story

But where did this technology come from? Typically, this story is told as a series of momentous events, with two of the classic stories being Alan Turing in the UK and J. Robert Oppenheimer in the US. As each of their stories deserves at least one post, if not an entire book, I won’t attempt a detailed account of either. However, it is helpful to think of their contributions as bookends on the range of technologies developed during the War.

Oppenheimer, of course, has become a household name again thanks to the Christopher Nolan film last year. The film, based on Kai Bird and Martin J. Sherwin’s American Prometheus, chronicles Oppenheimer’s unlikely journey from pathbreaking physicist to brilliant executive. The first half of the film (my favorite part, if we’re being honest) follows him as he assembles perhaps the greatest team of physicists the world has ever known to create the atomic bomb. As the film shows, the scale of this achievement is almost unimaginable. The advances required in physics and equipment, to say nothing of the ramifications for the future of warfare, are hard to exaggerate.

Nonetheless, there is something teleologically pleasing about Oppenheimer’s work. Like it or not, the nuclear bomb extended human warfare in a sort of linear direction. Humankind has always sought bigger weapons that could kill more people. From sticks to spears, bows to guns, and cannons to bombs, human history is punctuated by improvements in weapons. The nuclear bomb was, in essence, a sort of exclamation point to this process. We had arrived at a place where we could destroy the world. Oppenheimer realized this, of course, famously quoting a line from the Bhagavad Gita: “Now I am become Death, the destroyer of worlds.”

If Oppenheimer represented the extreme end of humankind’s very physical innovations in war, Turing sits on the opposite end of the spectrum. His achievements, dramatized in the excellent movie The Imitation Game (for those looking for a counterpart to the Nolan film), were almost entirely cerebral. Working at the famous Bletchley Park, Turing is best known for cracking the codes produced by the German Enigma machine. But, to do this, he had to develop a machine capable of crunching millions of combinations to arrive at an intelligible answer, one of the first computing machines of its kind.3

Turing’s work extended far beyond code-breaking, however. He forever left his mark on computer science through a series of theories on computers' strengths and limits. Perhaps the most famous of these is the Turing test, which has recently taken on new importance with the rise of LLMs. Though less visually arresting than the bomb at Los Alamos, Turing’s ideas and creations shaped the development of digital technologies for decades to come.

Some New Contributions

Though these two are perhaps the best-known stories of the Second World War, they are hardly the only ones. From the improvements of planes to innovations in shipbuilding, manufacturing, and chemicals, WWII shaped many innovations. Thankfully, recent work by economic historians Dan Gross and Bhaven Sampat helps us see exactly how large this change in trajectory was.

Gross and Sampat do this through a series of studies examining the US Government’s Office of Scientific Research and Development (OSRD), run by Vannevar Bush (yes, related, albeit very distantly, to George W. Bush). This little office received only about $6.2 million in annual funding in 1939 (about $140M in today’s dollars). But, by 1947, this figure had grown to over $132 million (about $3.2B today). The OSRD’s mission was to advance science so the government could win the war, and it did so by funding research at various institutions across the country.4

And fund it did! As the graph below shows, WWII nearly tripled the percentage of US patents funded by the government. The key point here, evidenced in the stories of Turing and Oppenheimer but measured by Gross and Sampat, is that governments knew they needed technology to win the War and invested heavily in it.5

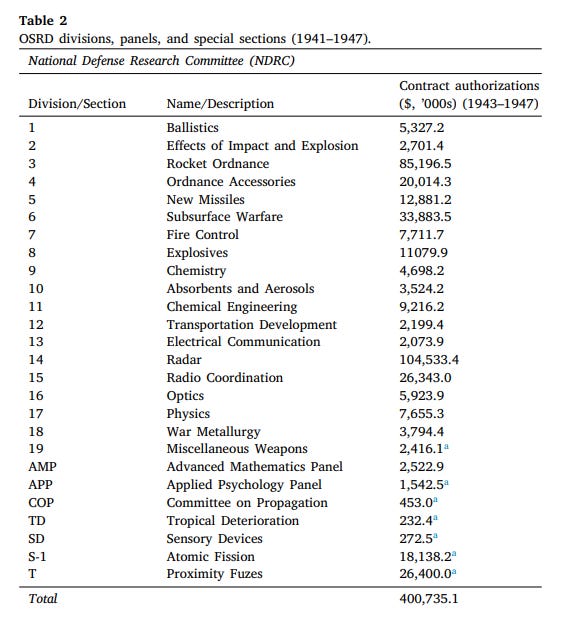

But where did they spend this money? As you’d imagine, on all sorts of innovations that would interest the military.6

Looking at where the government spent its research budget, there are some obvious things on the list - ballistics, for instance. But there are some surprising things as well: radar, radio, and optics, for instance. If these do not sound surprising to a reader today, that is because we’re primed to think of cyberwarfare as warfare. These were nascent fields in the 1940s. The first “computer science” department was not established until 1962, at Purdue University, with the UK following at the University of Manchester in 1964.

What’s perhaps most interesting about Gross and Sampat’s research isn’t just the list of topics the government invested in; it’s also the relative funding amounts. Ballistics, certainly critical to warfare, received $5.3M (about $75M in today’s dollars). By comparison, radar received nearly 20 times that amount, equating to about $1.5B today. For reference, the OSRD also funded the initial research that went on to create Oppenheimer’s Manhattan Project, but it spent less than a fifth as much on atomic fission as it did on radar. In fact, it spent more on radio than on atomic fission, vividly demonstrating the government’s priorities.7

Ramifications

While it’s tempting to conclude simply that WWII saw a lot of innovation and even that it sped up the rate of innovation, the bigger question is whether these investments shifted the trajectory of innovation.

Gross and Sampat find evidence of this geographically.8 Middlesex County, in Massachusetts, was not an electronics hub before the war. However, thanks to substantial government investment seeking to leverage nearby Harvard and MIT, by the end of the war, it was patenting at twice the rate it was before the war. Critically, this trend has continued, making it well-known for “tech” today.

Beyond geography, by investing heavily in the types of innovations that would become “computer science,” the government laid the foundation for the technological revolution we’re living through today. Though the counterfactual is difficult to measure, and outside the scope of Gross and Sampat’s work, it’s hard to imagine that we’d have made the same level of progress in computing without these wartime advances. This is certainly true if we add in subsequent government investments, which I discuss in my posts on semiconductors and IBM.

What innovations would we have had without the War? This is a billion-dollar question and not one that I can provide a concrete answer to. Unlike in art, it’s hard to see the types of works or schools of thought that might have continued had the War never happened. Things like flight and telecommunications (the telegraph at the time) were already experiencing significant private investment, so the War seems likely to have only sped those up. It’s harder to envision the private sector seeing an enormous need for cryptography at the time. Or, later, integrated circuits. The counterfactual remains intriguing, but, at least to my reading so far, unknowable.

For example, see: M.S. Rau. 2022. “The Power of Post-War Art.” November 30, 2022. https://rauantiques.com/blogs/canvases-carats-and-curiosities/the-power-of-post-war-art.

Fessenden, Maris. 2015. “Women Were Key to WWII Code-Breaking at Bletchley Park.” Smithsonian Magazine, January 27, 2015. https://www.smithsonianmag.com/smart-news/women-were-key-code-breaking-bletchley-park-180954044/.

See: “BBC - History - World Wars: Breaking Germany’s Enigma Code.” 2011. February 17, 2011. https://www.bbc.co.uk/history/worldwars/wwtwo/enigma_01.shtml.

Gross, Daniel P., and Bhaven N. Sampat. "The World War II Crisis Innovation Model: What Was It, and Where Does It Apply?" Research Policy 52, no. 9 (2023): 104845.

Gross, Daniel P., and Bhaven N. Sampat. "America, Jump-Started: World War II R&D and the Takeoff of the US Innovation System." American Economic Review 113, no. 12 (2023): 3323-3356.

Gross, Daniel P., and Bhaven N. Sampat. "Crisis Innovation Policy from World War II to COVID-19." Entrepreneurship and Innovation Policy and the Economy 1, no. 1 (2022): 135-181.

Gross and Sampat (2022)

Gross and Sampat (2023a)